Introduction

1. Methodology

1.1. Temporal convolutional network

1.2. Sparrow search algorithm

2. Data processing and feature engineering

2.1. Sources of data

2.2. Data processing

2.2.1. Padding and dimension reduction

2.2.2. Data integration

2.3. Feature analysis

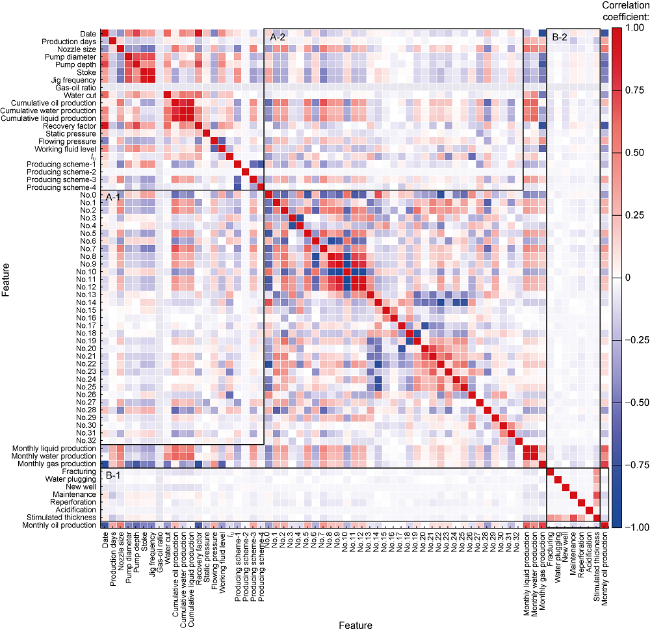

Fig. 1. Feature correlation analysis (No. 0 to No. 32 are abstract features obtained from the categorical and numerical features after PCA dimension reduction).

2.4. Division of oil well production histories

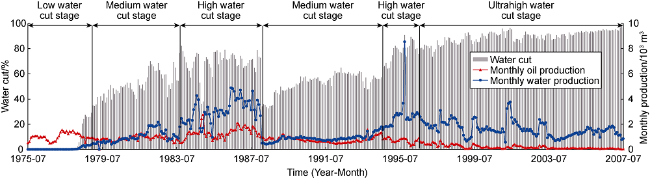

Fig. 2. Schematic diagram of production history division.

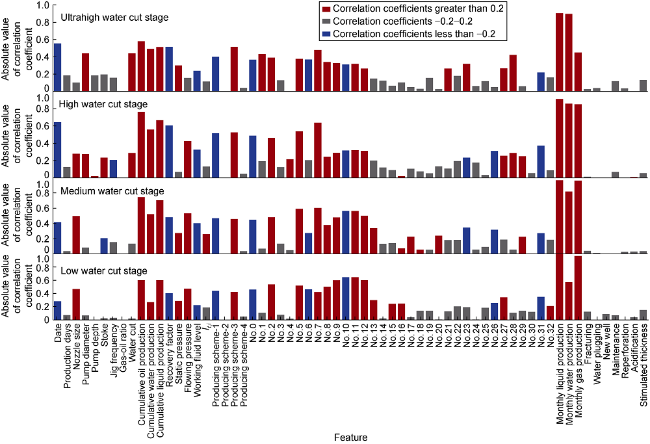

Fig. 3. Correlation coefficients between monthly oil production and influencing characteristics at each production stage.

2.5. Time sliding window and data set division

3. Model structure design and evaluation

3.1. Model structure design

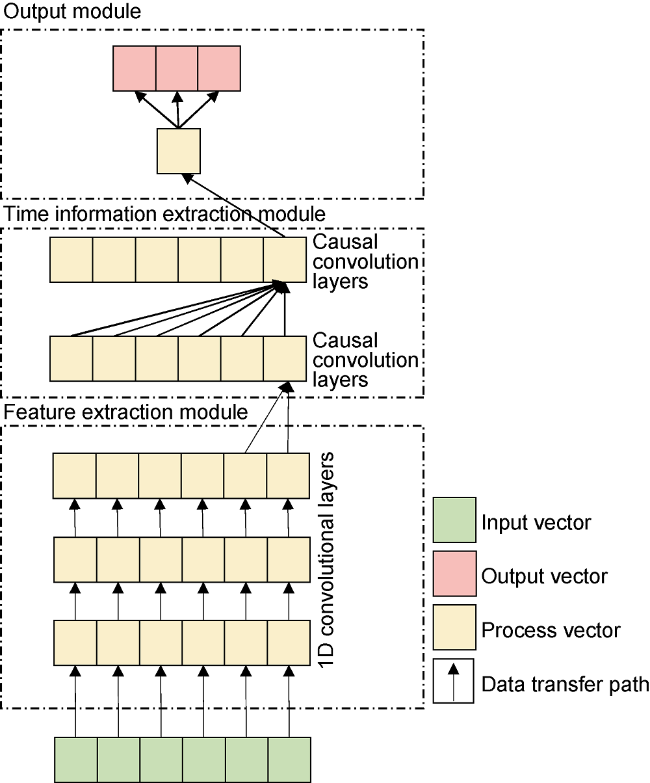

Fig. 4. Structure of the improved TCN model.

3.2. Hyperparameter design of sparrow search algorithm

Table 1. Optimal hyperparameters of the improved TCN model found by SSA

| Layer | Filters | Kernel size | Dilation rate | Function |
|---|---|---|---|---|
| 1 | 46 | 1 | 1 | Feature extraction |
| 2 | 25 | 1 | 1 | Feature extraction |
| 3 | 10 | 1 | 1 | Feature extraction |
| 4 | 46 | 2 | 1 | Time information fusion |
| 5 | 125 | 12* | 1 | Time information fusion |

Note: * denotes a fixed model hyperparameter, set by the input time step length in this study.

3.3. Design of comparative models

3.4. Design of the training set

3.5. Model evaluation

4. Application and discussion

4.1. Comparison of different algorithms

Table 2. Comparison of the mean absolute errors (MAE, m³) of the production predicted for the 13th month by different models

| Models | Stage model: low water cut stage | Stage model: middle water cut stage | Stage model: high water cut stage | Stage model: ultrahigh water cut stage | Whole life prediction: integrated model | Whole life prediction: single model |
|---|---|---|---|---|---|---|
| Improved TCN models | 23.22 | 17.93 | 13.15 | 19.53 | 17.66 | 20.71 |
| CNN-LSTM | 30.86 | 18.89 | 19.84 | 21.44 | 21.39 | 21.60 |
| LSTM | 37.55 | 22.71 | 16.98 | 22.40 | 22.35 | 22.55 |
| Attention-LSTM (T) | 65.26 | 31.31 | 38.95 | 28.13 | 35.72 | 30.20 |
| Attention-LSTM (F) | 53.80 | 25.58 | 32.27 | 25.27 | 30.28 | 30.20 |
| Attention-LSTM (T&F) | 75.77 | 38.00 | 42.78 | 31.95 | 40.79 | 40.71 |
| Self-Attention (F) | 65.26 | 36.09 | 25.58 | 40.55 | 37.63 | 34.02 |
| Self-Attention (T) | 131.19 | 61.89 | 33.22 | 49.15 | 55.12 | 33.06 |
| Self-Attention (T&F) | 139.79 | 92.46 | 30.35 | 44.38 | 59.32 | 34.98 |
| Self-Attention-LSTM (F) | 79.60 | 38.95 | 27.49 | 31.00 | 36.39 | 25.42 |
| Self-Attention-LSTM (T) | 92.97 | 46.60 | 93.42 | 33.86 | 54.18 | 26.38 |
| Self-Attention-LSTM (T&F) | 110.17 | 54.24 | 121.13 | 31.00 | 60.60 | 28.46 |

Note: All rows from CNN-LSTM to Self-Attention-LSTM (T&F) are comparative models. T: attention mechanism for time; F: attention mechanism for feature.

4.2. Comparison of the correction methods for data padding

Table 3. Evaluation results of TCN models trained on samples processed with different filling and correction strategies

| Data processing | MAE/m³ | MAPE/% | R² | RMSE/m³ |
|---|---|---|---|---|
| Model proposed in this study | 23.83 | 5.16 | 0.99 | 63.57 |
| Average value without correction | 26.13 | 5.42 | 0.98 | 68.10 |
| Average value with correction | 25.72 | 5.32 | 0.98 | 69.25 |
| Interpolation with correction | 24.35 | 5.29 | 0.99 | 64.02 |

Note: 12 months of production data are used to predict well production in the next 3 months; the values in the table evaluate the error of the production predicted for the 13th month.
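The four indicators reported in these tables (MAE, MAPE, R², RMSE) can be reproduced with a few lines of NumPy. The sketch below assumes the standard textbook definitions, since the exact formulas used in this study are not reproduced here; the function name `regression_metrics` and the sample values are hypothetical.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return MAE, MAPE (%), R2 and RMSE under their standard definitions.

    Assumption: y_true contains no zeros, otherwise MAPE is undefined.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true

    mae = np.mean(np.abs(err))                     # mean absolute error
    rmse = np.sqrt(np.mean(err ** 2))              # root mean squared error
    mape = 100.0 * np.mean(np.abs(err / y_true))   # mean absolute percentage error
    ss_res = np.sum(err ** 2)                      # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2) # total sum of squares
    r2 = 1.0 - ss_res / ss_tot                     # coefficient of determination

    return {"MAE": mae, "MAPE": mape, "R2": r2, "RMSE": rmse}

# Made-up monthly production values (m3), for illustration only.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]
metrics = regression_metrics(actual, predicted)
```

Because RMSE squares the errors before averaging, it penalizes a few large misses more heavily than MAE does, which is one reason the two are usually reported together.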

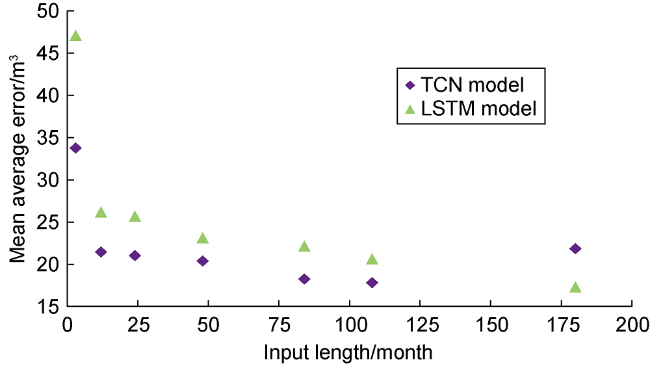

4.3. Comparison of model input step length

Fig. 5. Prediction results of TCN and LSTM models under different input step lengths. |

4.4. Analysis of model structure

4.4.1. Necessity of feature extraction by stacking 1D-convolutional layers

Table 4. Quantitative evaluation of the three predicted values from TCN models with and without feature extraction layers

| Method | MAE/m³ | MAPE/% | R² | RMSE/m³ |
|---|---|---|---|---|
| Without feature extraction layers (13) | 26.83 | 5.46 | 0.99 | 60.12 |
| Without feature extraction layers (14) | 39.67 | 6.98 | 0.97 | 86.58 |
| Without feature extraction layers (15) | 52.77 | 11.23 | 0.95 | 112.69 |
| With feature extraction layers (13) | 20.71 | 4.96 | 0.99 | 53.57 |
| With feature extraction layers (14) | 35.80 | 6.31 | 0.97 | 84.31 |
| With feature extraction layers (15) | 49.94 | 10.05 | 0.95 | 111.07 |

Note: 12 months of production data are used to predict monthly well production in the next 3 months. (13), (14) and (15) denote the predicted production in the 13th, 14th and 15th months, respectively.
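The 12-month-in, 3-month-out setup described in the note can be illustrated with a simple sliding window over a monthly series. This is a minimal sketch under stated assumptions: it uses a single univariate series, whereas the study's samples also carry the engineered features; the function name `make_windows` and the toy data are hypothetical.

```python
import numpy as np

def make_windows(series, n_in=12, n_out=3):
    """Slide a window over a 1-D monthly series.

    Each sample pairs n_in consecutive months (input) with the following
    n_out months (targets), i.e. months 13, 14 and 15 for the defaults.
    """
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - n_in - n_out + 1
    if n_samples <= 0:
        # Series too short to form even one complete window.
        return np.empty((0, n_in)), np.empty((0, n_out))
    X = np.stack([series[i:i + n_in] for i in range(n_samples)])
    y = np.stack([series[i + n_in:i + n_in + n_out] for i in range(n_samples)])
    return X, y

# Toy series: 18 months with made-up values 1..18.
monthly = np.arange(1, 19)
X, y = make_windows(monthly)
# X has one row per window of 12 inputs; y holds the 3 targets per window.
```

With 18 months of history, the window can start at four positions, so four samples are produced; the first sample's targets are the values of months 13 to 15.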

4.4.2. Selection of the output from the causal convolution layer

Table 5. Effects of different output selections of the causal convolution layer on model prediction results

| Output | MAE/m³ | MAPE/% | R² | RMSE/m³ |
|---|---|---|---|---|
| Full outputs | 20.71 | 4.96 | 0.99 | 53.57 |
| The last 1 month | 20.81 | 4.97 | 0.99 | 53.59 |
| The last 2 months | 23.35 | 5.14 | 0.99 | 57.47 |
| The last 6 months | 24.12 | 5.28 | 0.99 | 57.53 |
| The last 10 months | 24.83 | 5.31 | 0.99 | 57.62 |

Note: 12 months of data are used to predict monthly well production in the next 3 months.

4.4.3. Selection of activation function

Table 6. Prediction results of TCN models with different activation functions

| Activation function | MAE/m³ | MAPE/% | R² | RMSE/m³ |
|---|---|---|---|---|
| softmax | 23.63 | 5.17 | 0.99 | 57.32 |
| sigmoid | 23.49 | 5.15 | 0.99 | 55.90 |
| softsign | 20.71 | 4.96 | 0.99 | 53.57 |
| relu | 28.87 | 5.73 | 0.98 | 64.18 |
| tanh | 23.13 | 5.12 | 0.99 | 58.10 |

Note: 12 months of data are used to predict monthly well production in the next 3 months.

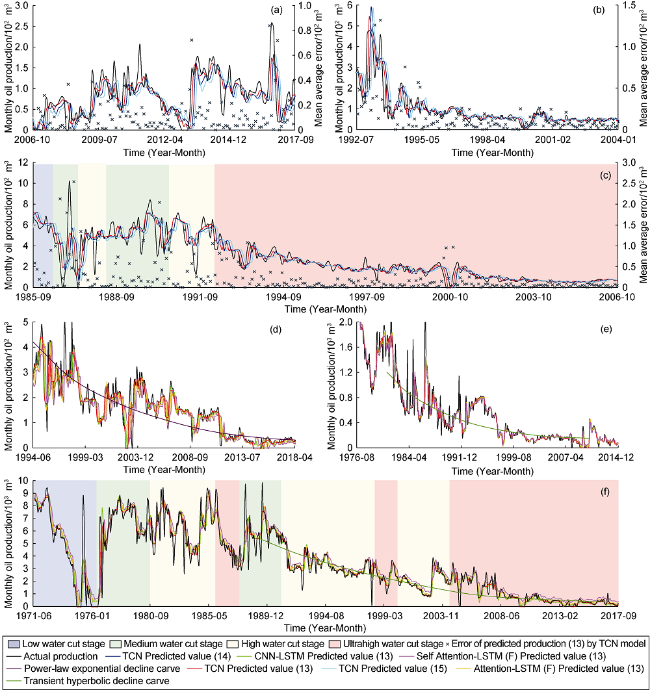

4.5. Discussion of the model application results

Fig. 6. Comparison of predicted and actual production of 6 randomly selected wells (predicted value (13) is the predicted production of 13th month by the model using historical data of the previous 12 months, predicted value (14) is the predicted production of 14th month, and predicted value (15) is the predicted production of the 15th month). (a) Comparison and error distribution between the actual production curve and the predicted production curve predicted by TCN of Well A; (b) Comparison and error distribution between the actual production curve and the predicted production curve predicted by TCN of Well B; (c) Comparison and error distribution between the actual production curve and the predicted production curve predicted by TCN of Well C; (d) Comparison between the actual production curve and the predicted production curve predicted by 5 different models of Well D; (e) Comparison between the actual production curve and the predicted production curve predicted by 5 different models of Well E; (f) Comparison between the actual production curve and the predicted production curve predicted by 5 different models of Well F. |