Introduction

1. Theoretical basis

1.1. Objective functions

1.2. Limitation of conventional objective function calculations

1.3. Classification metrics

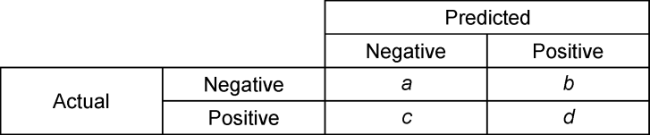

1.3.1. Confusion matrix

Fig. 1. The confusion matrix for a two-class classification problem. a: the number of correct predictions for a negative instance, or true negatives (TN); b: the number of incorrect predictions for a negative instance, or false positives (FP); c: the number of incorrect predictions for a positive instance, or false negatives (FN); d: the number of correct predictions for a positive instance, or true positives (TP).
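As a minimal sketch of how the four cells described in Fig. 1 are counted, the Python snippet below tallies TN, FP, FN and TP from two equally long binary sequences. The function name `confusion_counts`, the 0/1 encoding and the example data are illustrative assumptions and are not taken from the paper.

```python
from typing import Sequence, Tuple


def confusion_counts(observed: Sequence[int],
                     predicted: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TN, FP, FN, TP) for two binary sequences (0 = negative, 1 = positive)."""
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    tn = fp = fn = tp = 0
    for obs, pred in zip(observed, predicted):
        if obs == 0 and pred == 0:
            tn += 1  # cell a: negative instance predicted as negative
        elif obs == 0 and pred == 1:
            fp += 1  # cell b: negative instance predicted as positive
        elif obs == 1 and pred == 0:
            fn += 1  # cell c: positive instance predicted as negative
        elif obs == 1 and pred == 1:
            tp += 1  # cell d: positive instance predicted as positive
        else:
            raise ValueError("labels must be 0 or 1")
    return tn, fp, fn, tp


# Hypothetical example data
observed = [0, 0, 1, 1, 1, 0]
predicted = [0, 1, 1, 0, 1, 0]
tn, fp, fn, tp = confusion_counts(observed, predicted)
print(f"TN={tn} FP={fp} FN={fn} TP={tp}")
```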

1.3.2. The Matthews correlation coefficient (MCC)

Table 1. Metrics used for binary classification, adapted from Tharwat [10].

| Binary metric | Key features | Application | Formula |
|---|---|---|---|
| Confusion Matrix (CM) | The CM cross-tabulates observed and predicted data to describe the quality of a binary response (true/false, positive/negative). | The CM is the basis from which the other metrics are calculated. | |
| False Positive Rate (FPR) | FPR represents the proportion of negative cases that are incorrectly classified as positive from the total number of negative outcomes. | Also known as fallout or false alarm rate. This metric is not affected by imbalanced data. | $\mathrm{FPR} = \frac{FP}{FP + TN}$ |
| True Negative Rate (TNR) | TNR represents the proportion of negative cases that are correctly identified as negative from the total number of negative outcomes. | It is also called specificity or inverse recall. This metric is less affected by imbalanced data. | $\mathrm{TNR} = \frac{TN}{TN + FP}$ |
| False Negative Rate (FNR) | FNR represents the proportion of positive cases that are incorrectly identified as negative from the total number of positive outcomes. | It is also called miss rate. This metric is less affected by imbalanced data. | $\mathrm{FNR} = \frac{FN}{FN + TP}$ |
| Precision (P) | Represents the proportion of positive predictions that are correct. When the prediction is yes, how often is it correct? | It is also called the “confidence” metric. It does not consider the number of true negatives. | $P = \frac{TP}{TP + FP}$ |
| Recall (R) | Measures the accuracy on the positive class. When the actual outcome is yes, how often does the classifier predict yes? | The metric quantifies how many of the real positive cases are predicted. It is expressed as the rate of discovery of positive cases. | $R = \frac{TP}{TP + FN}$ |
| F-Measure (FM) | Combines precision and recall as their harmonic mean. | It considers the ratio of true positives to the arithmetic mean of predicted positives and real positives. This metric is sensitive to changes in the class distribution. | $\mathrm{FM} = \frac{2 P R}{P + R}$ |
| Accuracy (A) | Represents the ratio of correct predictions to all predictions. The best value is 1 and the worst value is 0. | The metric is not reliable for imbalanced data. It can provide an overoptimistic estimate of classifier performance. | $A = \frac{TP + TN}{TP + TN + FP + FN}$ |
| Matthews correlation coefficient (MCC) | Represents the relation between the observed and predicted classes. | The outcome ranges from +1 to -1, where +1 represents a perfect prediction and -1 total disagreement. The metric is sensitive to imbalanced data. | $\mathrm{MCC} = \frac{A - B}{\sqrt{C \cdot D \cdot E \cdot F}}$, where $A = TP \cdot TN$, $B = FP \cdot FN$, $C = TP + FP$, $D = TP + FN$, $E = TN + FP$, $F = TN + FN$ |
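The metrics listed in Table 1 can all be computed from the four confusion matrix counts. The Python sketch below uses the standard textbook definitions; the function name `binary_metrics`, the choice to report undefined ratios as NaN, and the example counts are assumptions made for illustration only.

```python
import math


def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Compute the Table 1 metrics from confusion matrix counts."""

    def ratio(num: float, den: float) -> float:
        # Assumption: a ratio with a zero denominator is reported as NaN
        return num / den if den else float("nan")

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    # MCC denominator: sqrt(C * D * E * F) in the notation of Table 1
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "FPR": ratio(fp, fp + tn),
        "TNR": ratio(tn, tn + fp),
        "FNR": ratio(fn, fn + tp),
        "precision": precision,
        "recall": recall,
        # Harmonic mean of precision and recall, written in terms of counts
        "f_measure": ratio(2 * tp, 2 * tp + fp + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "MCC": ratio(tp * tn - fp * fn, mcc_den),
    }


# Hypothetical counts for a single model
scores = binary_metrics(tp=40, tn=45, fp=5, fn=10)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Writing the F-Measure directly in terms of counts avoids a division by zero when both precision and recall are zero but true or false predictions still exist.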

2. Methodology

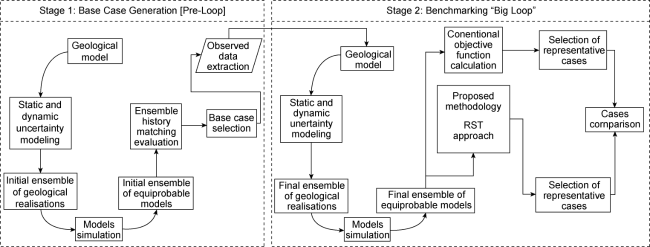

2.1. Benchmarking the proposed methodology

Fig. 2. Modified “Big Loop” workflow.

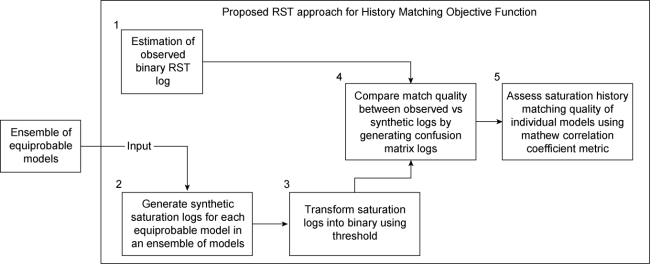

2.2. Proposed RST approach methodology

Fig. 3. Methodology proposed for enhanced history matching process using RST logs.

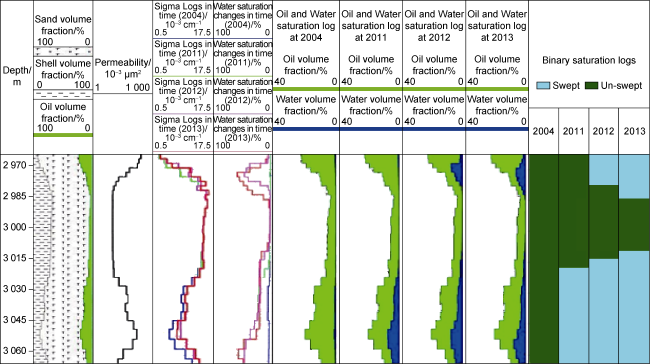

2.2.1. Estimation of observed binary interpretation of reservoir saturation logs

Fig. 4. Illustration of water saturation changes in the reservoir, as identified by cased hole saturation logs and interpreted as a binary “sweep” log.

2.2.2. Generating synthetic saturation logs for each equiprobable model

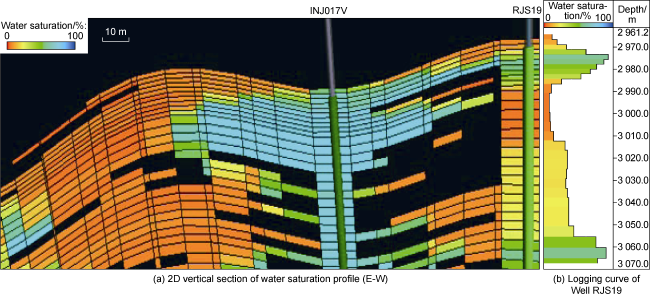

Fig. 5. (a) 2D vertical section of water saturation profile along the well trajectory of a producer and an injector, and (b) the producer synthetic water saturation log.

2.2.3. Transforming saturation logs into binary logs using a threshold

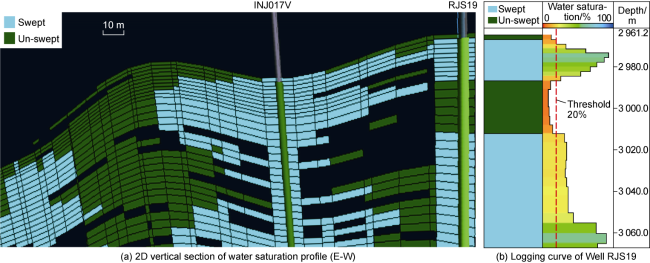

Fig. 6. Swept and un-swept areas of a 2D reservoir model slice highlighting the producer RJS19 and the closest injector at a specific time step.
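The transformation of a saturation log into a binary swept/un-swept log can be sketched in Python as follows. The function name `to_binary_sweep_log`, the saturation values, the threshold of 0.45 and the convention that a sample exactly at the threshold counts as swept are all hypothetical choices made for illustration.

```python
from typing import List, Sequence


def to_binary_sweep_log(sw_log: Sequence[float], threshold: float) -> List[int]:
    """Flag each depth sample as swept (1) or un-swept (0).

    Assumption: a sample is swept when its water saturation is at or
    above the threshold.
    """
    return [1 if sw >= threshold else 0 for sw in sw_log]


# Hypothetical synthetic water saturation log, one value per depth sample
synthetic_sw = [0.22, 0.25, 0.48, 0.61, 0.35, 0.70]
binary_log = to_binary_sweep_log(synthetic_sw, threshold=0.45)
print(binary_log)
```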

2.2.4. Comparing match quality between observed vs synthetic logs by generating a confusion matrix log

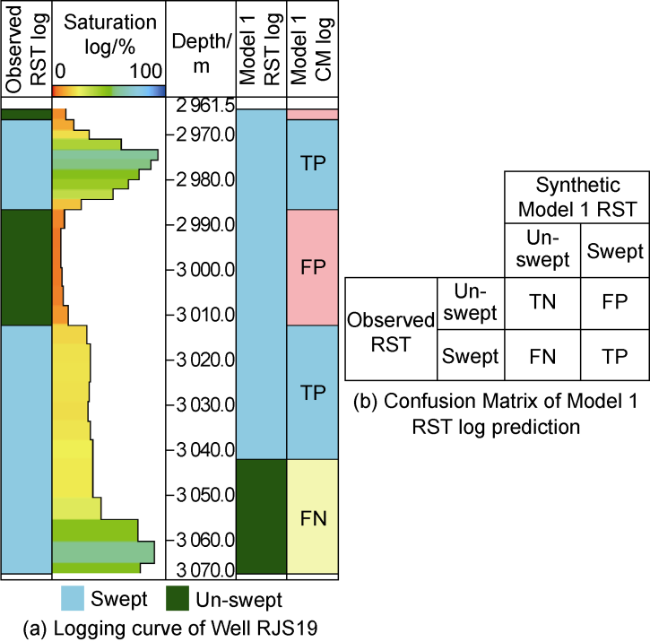

Fig. 7. Confusion matrix log of Model 1 and its corresponding confusion matrix table.
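One way to picture a confusion matrix log is as a per-depth label that records how the synthetic binary log compares with the observed one, with the confusion matrix table being the count of each label. The Python sketch below follows that reading; the function name `confusion_matrix_log`, the 0/1 encoding (1 = swept) and the example logs are assumptions and do not reproduce the data behind Fig. 7.

```python
from collections import Counter
from typing import List, Sequence

# Map (observed, synthetic) pairs to confusion matrix categories
_LABELS = {(1, 1): "TP", (0, 0): "TN", (0, 1): "FP", (1, 0): "FN"}


def confusion_matrix_log(observed: Sequence[int],
                         synthetic: Sequence[int]) -> List[str]:
    """Return one confusion category per depth sample."""
    if len(observed) != len(synthetic):
        raise ValueError("logs must be sampled at the same depths")
    return [_LABELS[(obs, syn)] for obs, syn in zip(observed, synthetic)]


# Hypothetical binary logs sampled at the same depths
observed_log = [0, 0, 1, 1, 1, 0]
synthetic_log = [0, 1, 1, 1, 0, 0]

cm_log = confusion_matrix_log(observed_log, synthetic_log)
cm_table = Counter(cm_log)  # aggregated counts per category
print(cm_log)
print(dict(cm_table))
```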

2.2.5. Assessing history matching quality of individual models using binary confusion matrix derived metrics

2.3. Geological model used

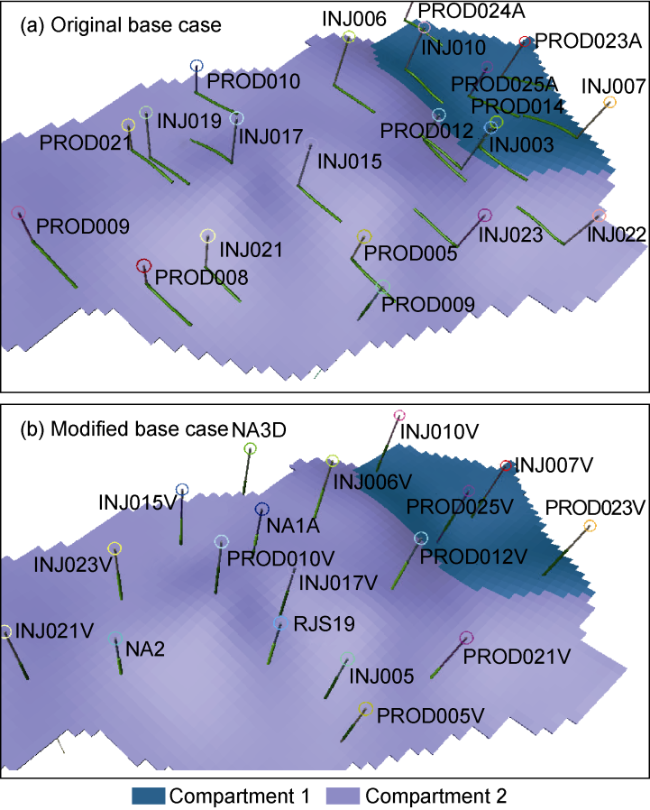

Fig. 8. Modification of injector and producer well trajectories.

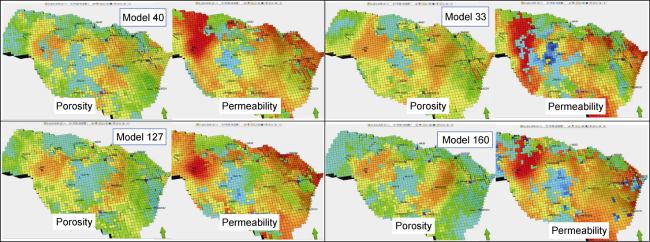

Fig. 9. Porosity and permeability diversity of four randomly selected models from the modified case ensemble.

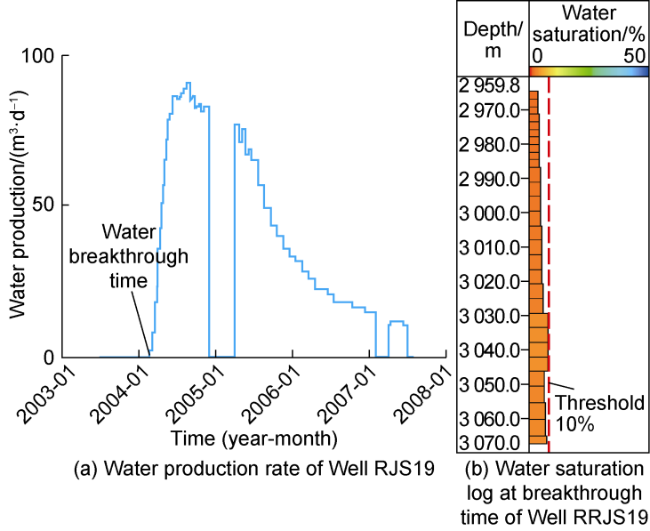

Fig. 10. Empirical threshold estimation using base case water saturation log at the time of water breakthrough.

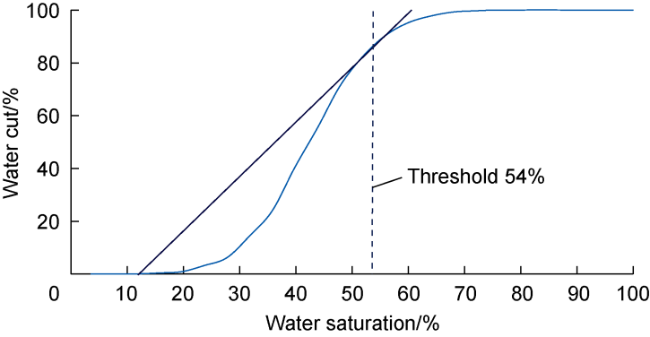

Fig. 11. Water saturation threshold using the Welge method.
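For readers unfamiliar with the Welge construction, the Python sketch below estimates a front water saturation as the point where a line drawn from the connate water saturation is tangent to the fractional flow curve, which is equivalent to maximising $f_w / (S_w - S_{wc})$. It is only an illustration under stated assumptions: Corey-type relative permeability curves, horizontal flow with gravity and capillary pressure neglected, and hypothetical parameter values. None of these inputs come from the paper.

```python
def fractional_flow(sw, swc, sor, nw, no, krw_end, kro_end, mu_w, mu_o):
    """Water fractional flow for Corey relative permeabilities (assumed model)."""
    se = (sw - swc) / (1.0 - swc - sor)  # normalised water saturation
    se = min(max(se, 0.0), 1.0)          # guard against round-off outside [0, 1]
    mob_w = krw_end * se ** nw / mu_w            # water mobility
    mob_o = kro_end * (1.0 - se) ** no / mu_o    # oil mobility
    return mob_w / (mob_w + mob_o)


def welge_front_saturation(swc=0.2, sor=0.2, nw=2.0, no=2.0,
                           krw_end=1.0, kro_end=1.0,
                           mu_w=1.0, mu_o=1.0, n_points=10001):
    """Grid search for the tangent point of the line drawn from (swc, 0)."""
    s_max = 1.0 - sor
    best_sw, best_slope = swc, 0.0
    for i in range(1, n_points):
        sw = swc + (s_max - swc) * i / (n_points - 1)
        fw = fractional_flow(sw, swc, sor, nw, no,
                             krw_end, kro_end, mu_w, mu_o)
        slope = fw / (sw - swc)  # slope of the secant from (swc, 0)
        if slope > best_slope:
            best_sw, best_slope = sw, slope
    return best_sw


# Hypothetical rock and fluid parameters (defaults above)
threshold = welge_front_saturation()
print(f"Front water saturation: {threshold:.3f}")
```

A grid search is used instead of a root finder to keep the sketch dependency-free; its resolution is controlled by `n_points`.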

3. Method application and discussion

3.1. Assessment and evaluation of classification metrics

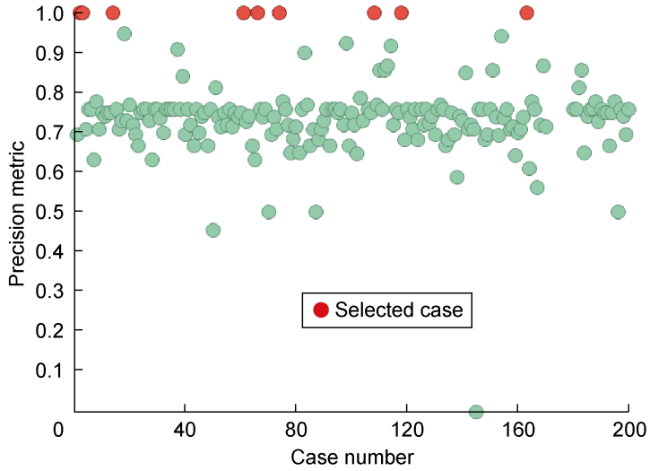

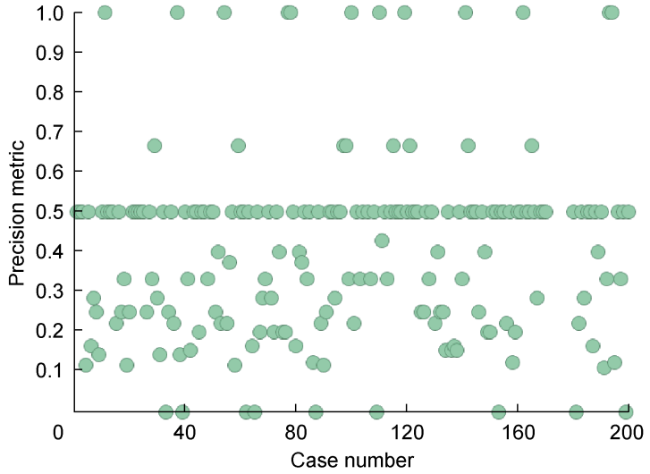

3.1.1. Precision

Fig. 12. Precision scores of all 200 models in Group A.

Fig. 13. Precision scores of all 200 models in Group B.

3.1.2. Accuracy, F-Measure and Recall

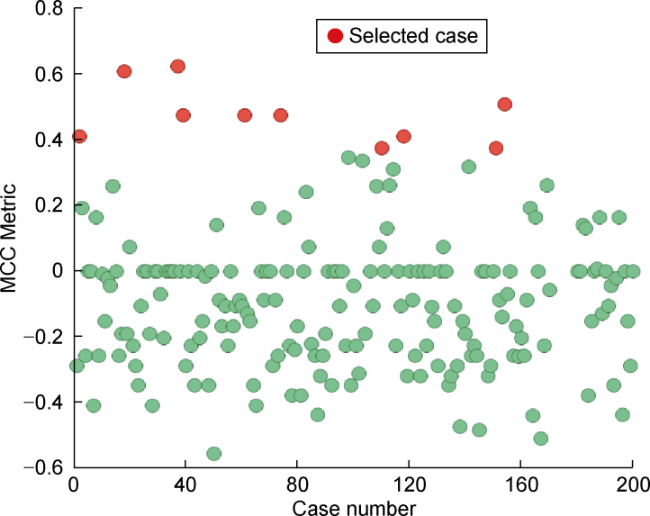

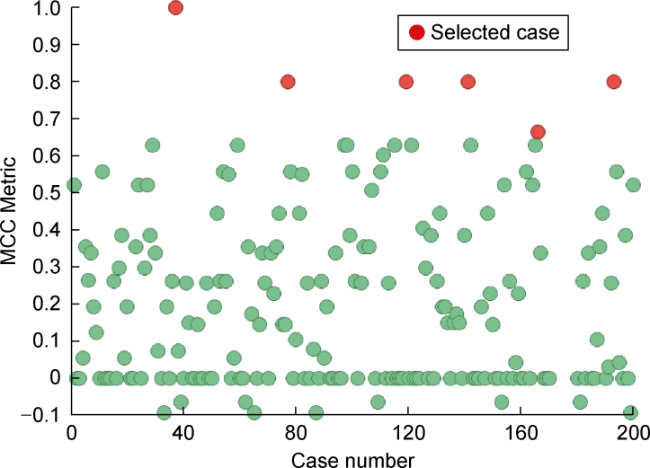

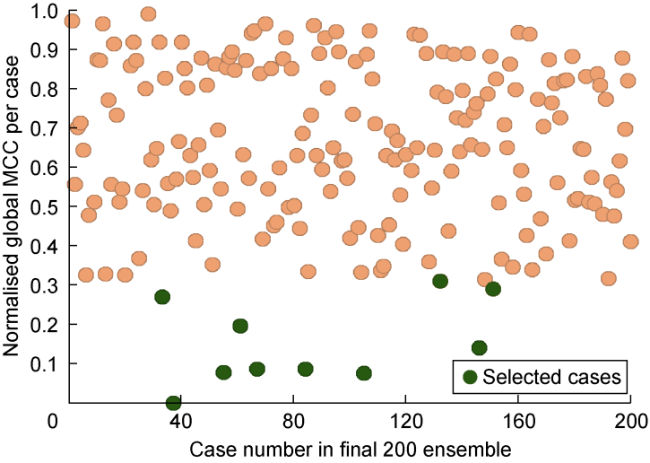

3.1.3. Matthews correlation coefficient

Fig. 14. MCC scores of all 200 models in Group A.

Fig. 15. MCC scores of all 200 models in Group B.

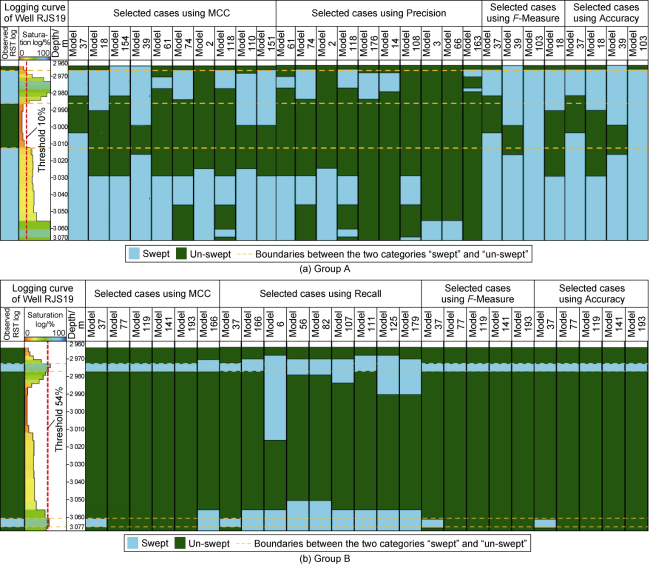

3.1.4. Binary metrics assessment summary

Fig. 16. Evaluation of selected model cases for each metric vs. observed binary RST logs.

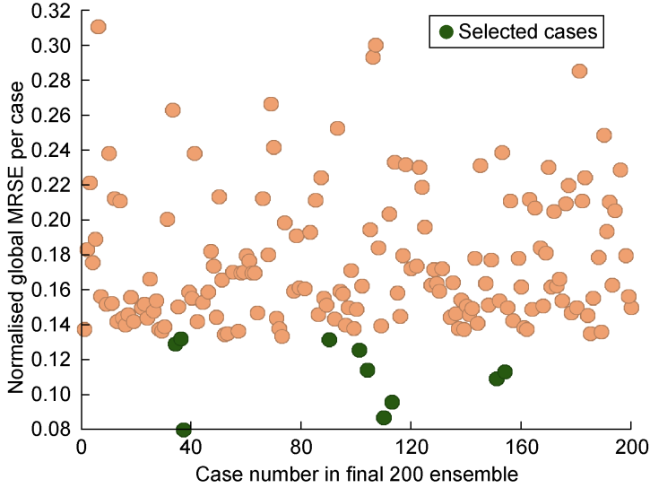

3.2. Comparing proposed RST methodology versus conventional history matching approach

Fig. 17. Global misfits of 200 cases using the proposed method.

Fig. 18. Global misfits of 200 cases using the conventional method.

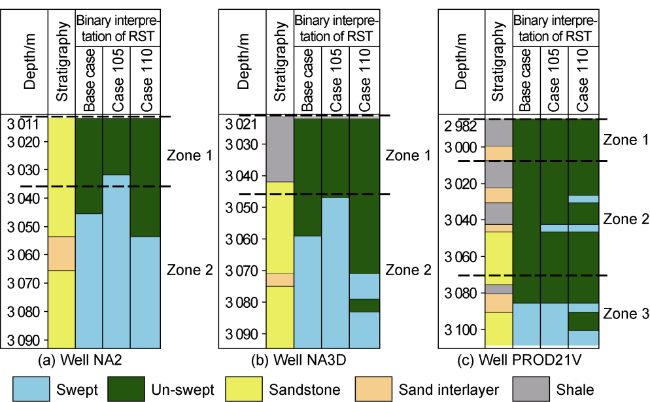

Fig. 19. Binary RST logs of top-ranked cases for the representative wells.

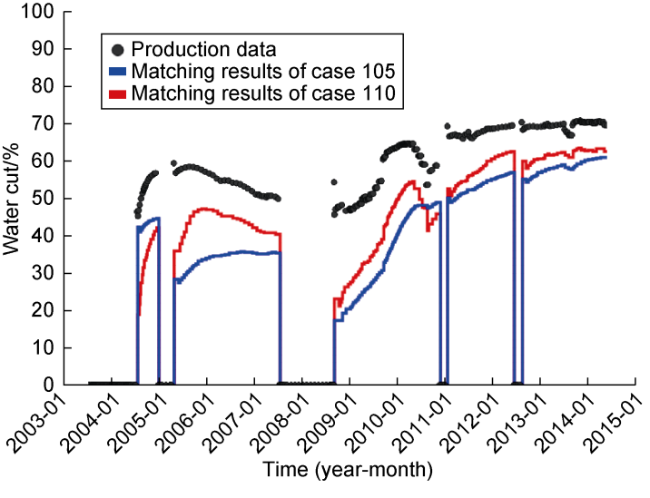

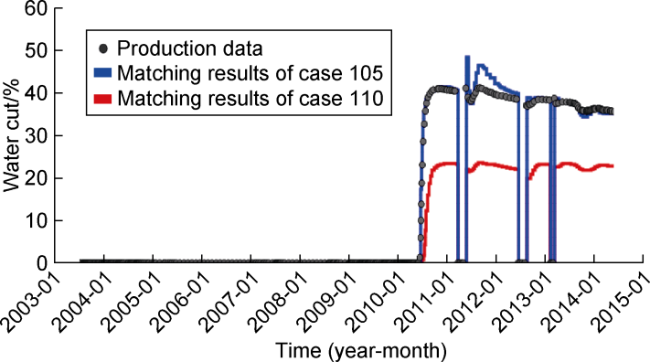

Fig. 20. Well NA2 water cut.

Fig. 21. Well PROD021V water cut.

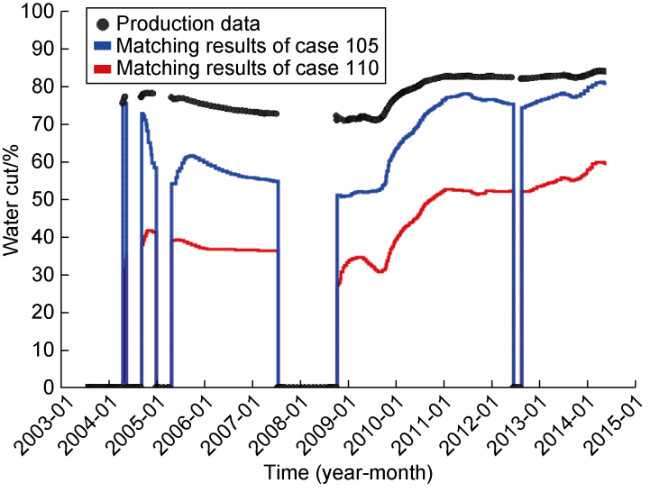

Fig. 22. Well NA3D water cut.

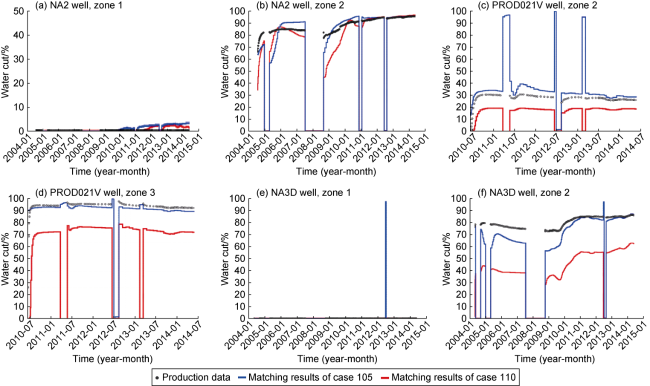

Fig. 23. Water cut per zone for wells NA2, NA3D and PROD021V using conventional and proposed methodologies.