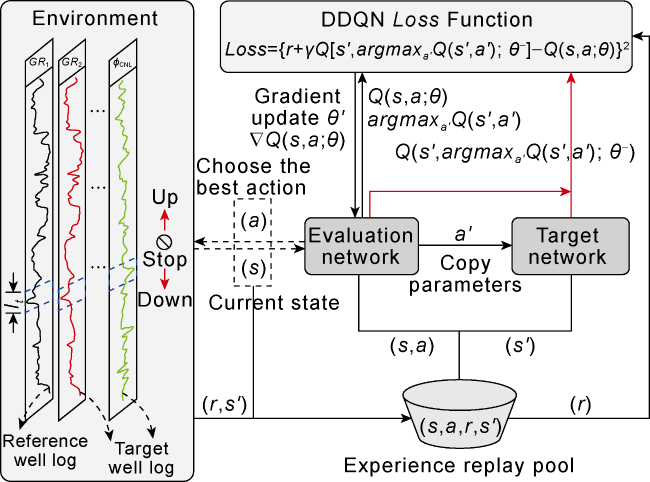

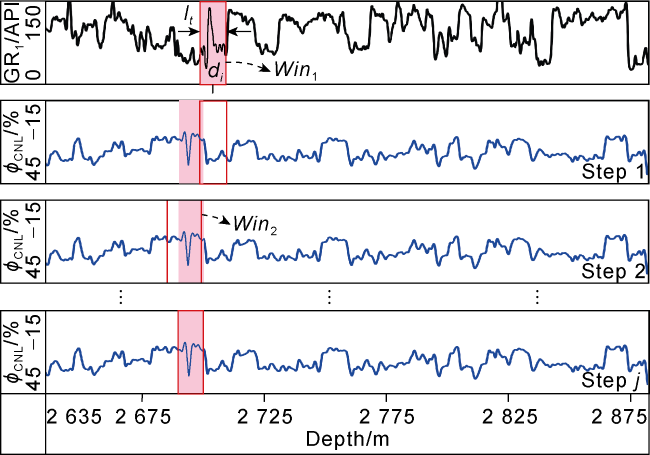

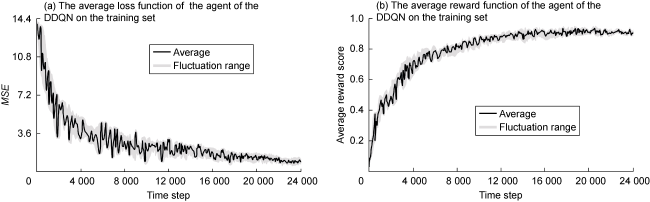

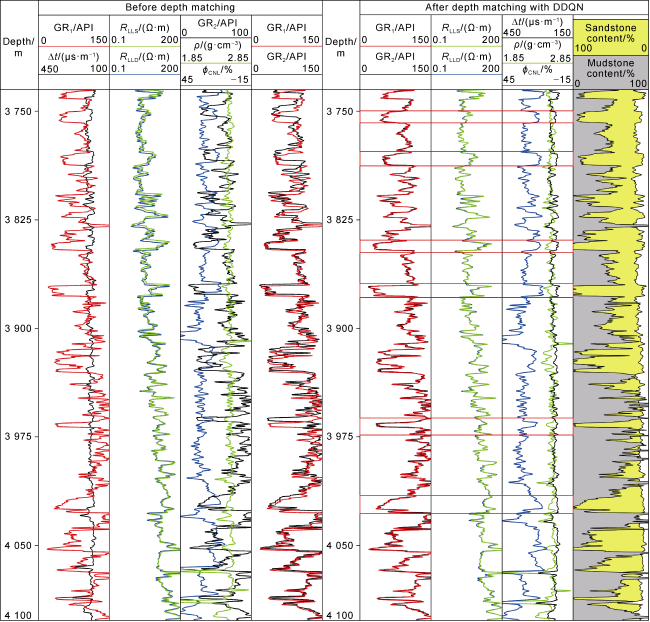

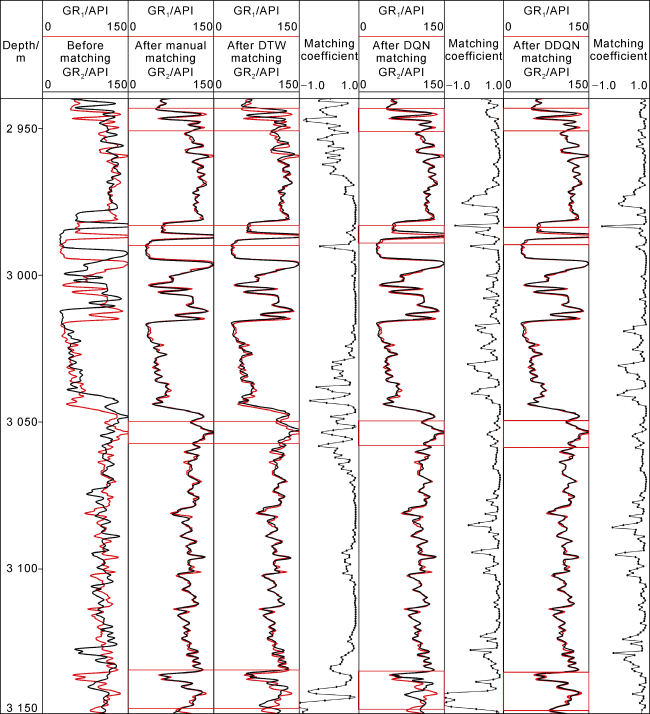

In this way, in the multi-agent depth matching task, each agent at time

t obtains a depth matching state by observing the feature sequences of well logs through the dual sliding windows. The first type of agent responsible for the depth matching between GR well logs makes decisions in a predefined order, that is to choose an action (

) based on the

ε-greedy strategy

[26] to act on the environment. Subsequently, the second type of agent, responsible for depth matching between the well logs

ϕCNL,

ρ, Δ

t, etc., and

GR1, selects an action

based on the decision-making result of the first type of agent to act on the well log to be matched. At time

t, each agent can update to the next state (

s') and receive a feedback reward (

r) after interacting with the environment. Then the experience replay pool (

Dt) collects and stores the experience samples

of agents at each time

t. As shown in

Fig. 3, during the model training process, DDQN randomly selects a batch of sample data from

Dt. It calculates the

Q-value

Q(

s,

a;

θ) for the selected action (

a) in the current state through the evaluation network, and estimates the

Q-value

of selected action (

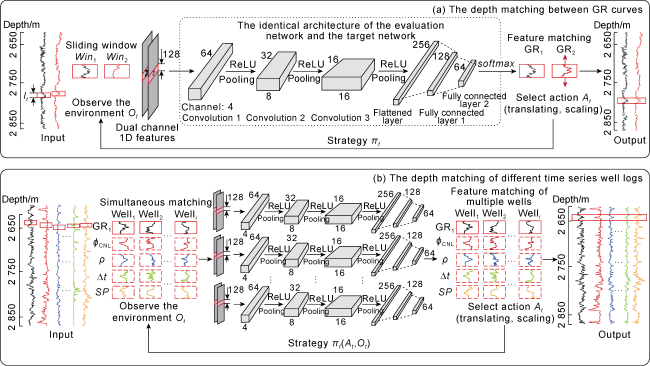

a') in the next state through the target network. This dual-network structure has the same architecture, and includes three convolutional layers (

Fig. 4), with each convolutional layer followed by a nonlinear activation function (ReLU) and a max-pooling layer, and the features are integrated through a fully connected layer to output the

Q-values. Then, the difference between the

Q-values of the evaluation network and the target network under the current strategy is calculated through a loss function (Eq. (5)). By minimizing the loss function, it is used to guide the optimization of the neural network, thereby learning a better matching strategy. Finally, the current evaluation network parameter (

θ) is updated to

θ' through a stochastic gradient descent (Eq. (6)) for the next round of training and learning. By repeating the above process, each agent can learn how to take the optimal action to complete depth matching for multiple well logs.
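The interaction and training loop described above can be illustrated with a minimal Python sketch. It is not the authors' implementation, and it assumes that DDQN denotes the standard Double DQN, in which the evaluation network selects a' and the target network scores it. The discount factor `gamma`, the `done` flag, the squared-error loss, and all function and class names are hypothetical additions for illustration. Plain lists of Q-values stand in for the outputs of the convolutional networks.

```python
import random
from collections import deque


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


class ReplayPool:
    """Fixed-capacity pool of (s, a, r, s_next, done) experience samples."""

    def __init__(self, capacity):
        self.samples = deque(maxlen=capacity)  # oldest samples are dropped first

    def store(self, s, a, r, s_next, done):
        self.samples.append((s, a, r, s_next, done))

    def sample(self, batch_size, rng):
        """Draw a random batch without replacement."""
        return rng.sample(list(self.samples), batch_size)


def double_dqn_target(r, q_eval_next, q_target_next, gamma, done):
    """Assumed Double DQN target: r + gamma * Q_target(s', argmax_a Q_eval(s', a))."""
    if done:  # no bootstrapping from a terminal state (assumed convention)
        return r
    a_next = max(range(len(q_eval_next)), key=lambda a: q_eval_next[a])
    return r + gamma * q_target_next[a_next]


def squared_td_loss(q_sa, target):
    """Squared difference between Q(s, a; theta) and the target (assumed loss form)."""
    return (target - q_sa) ** 2


# Usage: the evaluation network prefers action 1 in s', so the target network's
# value for action 1 (0.25) is used rather than its own maximum (0.75).
target = double_dqn_target(1.0, [0.2, 0.9, 0.1], [0.5, 0.25, 0.75], 0.5, False)
print(target)  # 1.125
```

Separating action selection (evaluation network) from action evaluation (target network) is what distinguishes this target from the single-network DQN target, which would have used 0.75 in the example above.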